Adolescent and adult mice use both incremental reinforcement learning and short term memory when learning concurrent stimulus-action associations

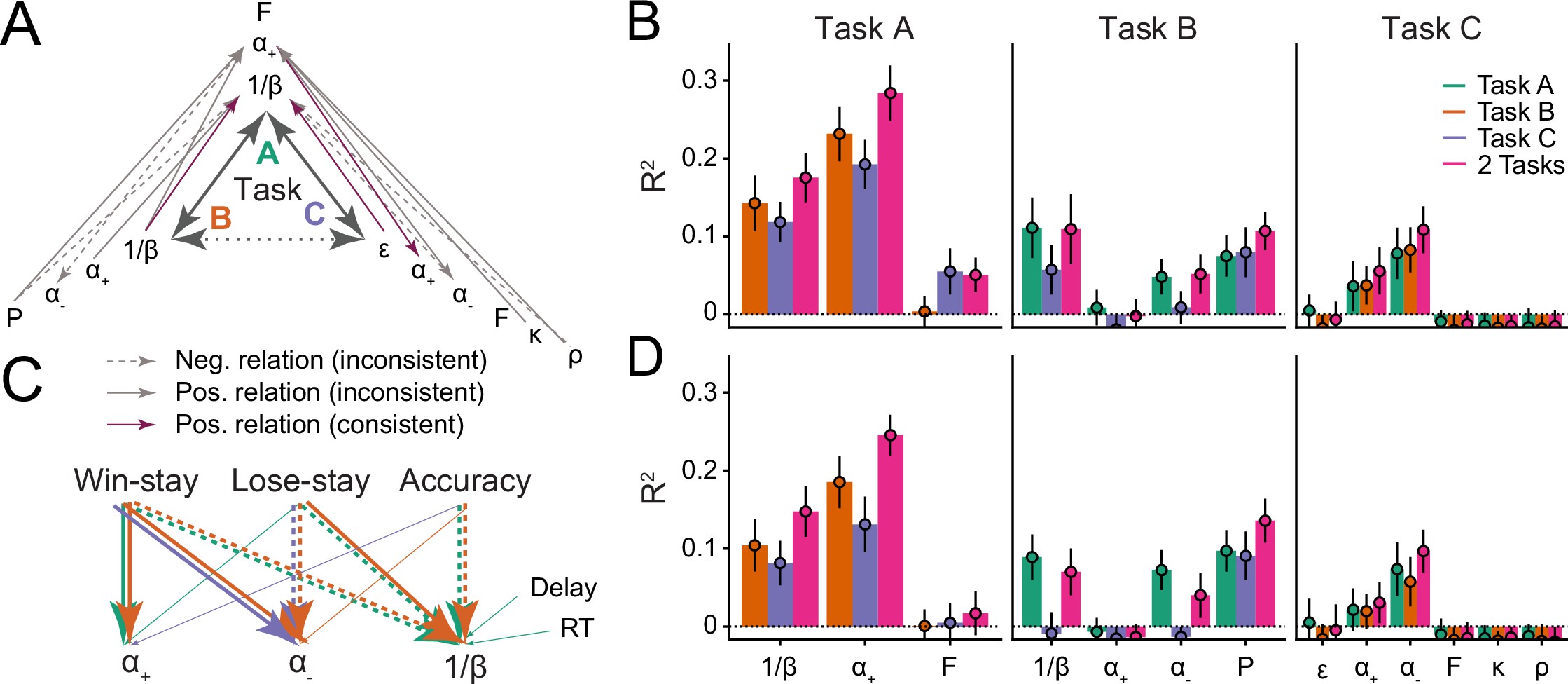

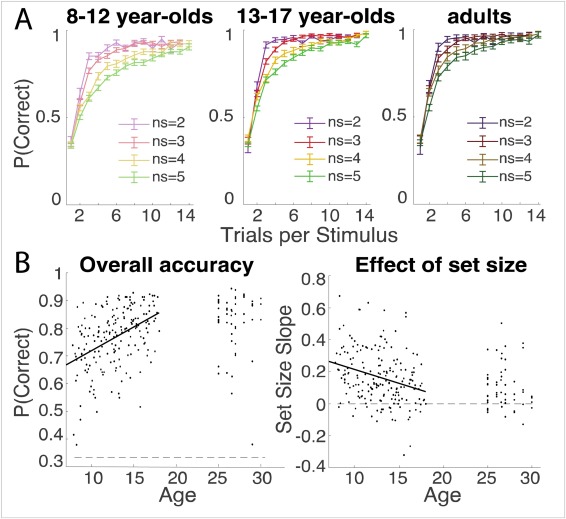

Computational modeling has revealed that human research participants use both rapid working memory (WM) and incremental reinforcement learning (RL), jointly termed RL+WM, to solve a simple instrumental learning task, relying on WM when the number of stimuli is small and supplementing with RL when the number of stimuli exceeds WM capacity. Inspired by this work, we examined which learning systems and strategies adolescent and adult mice use when they first acquire a conditional associative learning task. In a version of the human RL+WM task translated for rodents, mice were required to associate odor stimuli (from a set of 2 or 4 odors) with a left or right port to receive reward. Using logistic regression and computational models to analyze the first 200 trials per odor, we determined that mice used both incremental RL and stimulus-insensitive, one-back strategies to solve the task. While these one-back strategies may be a simple form of short-term or working memory, they did not approximate the boost to learning performance that has been observed in human participants using WM in a comparable task. Adolescent and adult mice showed comparable performance, with no change in the learning rate or softmax beta parameters across adolescent development and task experience. However, reliance on a one-back perseverative, win-stay strategy increased with development in males at both odor set sizes. Our findings advance a simple conditional associative learning task and new models that enable the isolation and quantification of reinforcement learning alongside other strategies mice use while learning to associate stimuli with rewards within a single behavioral session. These data and methods can inform and aid the comparative study of reinforcement learning across species.
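To make the model components named above concrete, the following is a minimal illustrative sketch of how incremental RL (a delta-rule update with a learning rate), a softmax choice rule with a beta parameter, and a stimulus-insensitive one-back win-stay term might be combined in a two-port odor task. It is not the fitted model from this study: the function names, the additive form of the win-stay bias, the initial values of 0.5, the deterministic reward, and all parameter values (`alpha`, `beta`, `win_stay`) are assumptions chosen for illustration.

```python
import math
import random


def softmax_left_prob(q_left, q_right, beta, bias_left=0.0):
    """Probability of choosing the left port under a two-option softmax.

    With two options the softmax reduces to a logistic function of the
    value difference scaled by beta. bias_left is a hypothetical additive
    term used here to carry the one-back win-stay influence.
    """
    x = beta * (q_left - q_right) + bias_left
    return 1.0 / (1.0 + math.exp(-x))


def simulate(n_odors=2, n_trials=400, alpha=0.1, beta=5.0, win_stay=0.5, seed=0):
    """Simulate an agent learning odor -> port associations.

    Actions: 0 = left port, 1 = right port. All settings are illustrative
    assumptions, not values estimated in the study.
    """
    rng = random.Random(seed)
    # Assumed task layout: odors alternate between left- and right-rewarded.
    correct = [i % 2 for i in range(n_odors)]
    # One pair of action values per odor, initialized at 0.5 (assumption).
    q = [[0.5, 0.5] for _ in range(n_odors)]
    prev_action, prev_reward = None, 0
    outcomes = []
    for _ in range(n_trials):
        odor = rng.randrange(n_odors)  # odors are drawn at random each trial
        # One-back win-stay: favor repeating the previous action if it was
        # rewarded, regardless of which odor is presented now.
        bias = 0.0
        if prev_action is not None and prev_reward == 1:
            bias = win_stay if prev_action == 0 else -win_stay
        p_left = softmax_left_prob(q[odor][0], q[odor][1], beta, bias)
        action = 0 if rng.random() < p_left else 1
        reward = 1 if action == correct[odor] else 0
        # Incremental RL: move the chosen value toward the obtained reward.
        q[odor][action] += alpha * (reward - q[odor][action])
        prev_action, prev_reward = action, reward
        outcomes.append(reward)
    return q, outcomes


if __name__ == "__main__":
    q_values, rewards = simulate(n_odors=4, n_trials=800)
    late = rewards[-100:]
    print("accuracy over last 100 trials:", sum(late) / len(late))
```

In a sketch like this, the learning rate and beta govern the stimulus-specific, incremental component, while `win_stay` captures a choice tendency that ignores the current odor. Separating the two is the kind of distinction the abstract describes between incremental RL and one-back strategies.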

Juliana B. Chase, Liyu Xia, Lung-Hao Tai, Wan Chen Lin, Anne G.E. Collins, Linda Wilbrecht, Adolescent and adult mice use both incremental reinforcement learning and short term memory when learning concurrent stimulus-action associations, bioRxiv 2024.04.29.591768; doi: https://doi.org/10.1101/2024.04.29.591768